See THIS POST

Notice the 2,000 upvotes?

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem: the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Spinning up a new instance can be fully automated and done in UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. (NEWS POST 1, POST TWO)

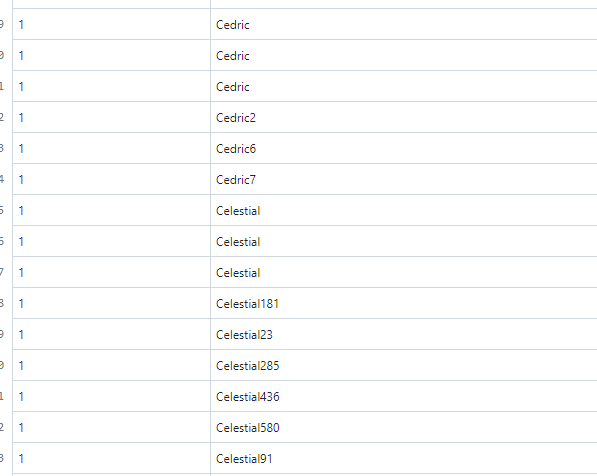

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance (lemmyonline.com) online… I can assure you I don't have 30k users and 1.2 million comments.

- There are no built-in "real-time" methods in the UI for admins to identify suspicious activity from their users. I am only able to fetch this data directly from the database, and I don't think it is even exposed through the REST API.
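To illustrate the kind of check an admin currently has to run by hand, here is a minimal sketch that flags young accounts casting an unusually large number of votes in a short window. It assumes vote rows have already been exported from the database as (account, account creation time, vote time); the `VoteRecord` type, the thresholds, and `flag_suspicious` are hypothetical and do not reflect Lemmy's actual schema or API.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class VoteRecord:
    # Hypothetical export format, not Lemmy's real table layout.
    account: str
    account_created: datetime
    voted_at: datetime


def flag_suspicious(
    votes: list[VoteRecord],
    max_account_age: timedelta = timedelta(days=2),
    window: timedelta = timedelta(hours=1),
    max_votes_in_window: int = 50,
) -> set[str]:
    """Flag accounts that cast more than `max_votes_in_window` votes within `window`
    while still younger than `max_account_age`. Thresholds are arbitrary examples."""
    by_account: dict[str, list[VoteRecord]] = defaultdict(list)
    for vote in votes:
        by_account[vote.account].append(vote)

    flagged: set[str] = set()
    for account, records in by_account.items():
        created = records[0].account_created
        # Keep only votes cast while the account was still "young", in time order.
        young = sorted(r.voted_at for r in records if r.voted_at - created <= max_account_age)
        # Sliding window over the sorted vote times.
        start = 0
        for end, t in enumerate(young):
            while t - young[start] > window:
                start += 1
            if end - start + 1 > max_votes_in_window:
                flagged.add(account)
                break
    return flagged


created = datetime(2023, 7, 1, 12, 0)
bot = [VoteRecord("bot1", created, created + timedelta(seconds=10 * i)) for i in range(100)]
human = [VoteRecord("alice", created, created + timedelta(minutes=30 * i)) for i in range(20)]
print(flag_suspicious(bot + human))  # {'bot1'}
```

A heuristic like this only produces leads for a human to review; a busy but legitimate new user could trip it with aggressive thresholds.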

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate content like that, as was proven above, what is going to happen when big corpo wants to swing its fist around?

Edits

- Removed most of the images naming specific instances. Some of those issues have already been taken care of, and I don't want to distract from the ACTUAL problem.

- Cleaned up post.

Just wanted to point out that according to your stats, unless I don’t understand them well, only 26 bots come from lemmy.world (which has open sign-ups, and uses the “easy to break” (/s) captcha) and 16 from lemmy.ml (which doesn’t have open sign-ups and relies on manual approvals).

For some perspective, lemmy.world has almost 48k users right now. Speaking of “corrective action” is a bit of a stretch IMO.

This post isn’t about lemmy.world, nor am I blaming lemmy.world!

I am trying to drag in the admins of the big instances to come up with a collective plan to address this issue.

There isn't a single instance causing these problems. The bots are distributed amongst normal users, on normal instances.

With the exception of an instance or two with nothing but bot traffic.

I’m just saying that context and scale matter. If an anti-spam solution is 99% effective, then chances are that on an instance with 100k users you are still going to have around 1k bots that have bypassed it.
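The arithmetic behind the 99% figure can be written out. A filter's effectiveness applies to bot sign-up attempts, so the sketch below takes the number of attempts as its input; the "around 1k" figure corresponds to assuming roughly 100k bot attempts, as the comment implicitly does. The function name is illustrative only.

```python
def expected_bypassing_bots(bot_attempts: int, effectiveness: float) -> float:
    """Expected number of bot accounts that get past a filter which blocks
    the fraction `effectiveness` of bot sign-up attempts."""
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return bot_attempts * (1.0 - effectiveness)


# 100k attempts against a 99%-effective filter: about 1,000 get through.
print(round(expected_bypassing_bots(100_000, 0.99)))  # 1000
```

Scale matters in both directions: the same filter leaks ten times as many bots if the attempt volume grows tenfold.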

You're right. But the problem is:

At a fediverse level, we don't really have ANY spam prevention currently.

Let's assume that, at an instance level, all admins do their part: enable applicant approvals, captchas, email verification, and EVERY TOOL they have at their disposal.

There is NOTHING stopping these bots from just creating new instances, and using those.

Keep focused on the problem: the platform-wide lack of the ability to prevent bots.

I don't agree with the beehaw approach of bulk defederation; as such, a better solution is needed.

The beehaw approach wasn’t “bulk defederation”. They blocked two Lemmy instances they were having trouble with. The bulk of their block list are Mastodon and Pleroma instances well known for trolling other sites and stirring up shit.

Edit: Autocomplete refuses to accept that I talk a lot about federation and defederating, and is desperately trying to convince me I'm talking about anything else that starts with "de".

https://beehaw.org/instances

While the majority of the instances on their list do appear to be potentially noisy or bad, there are quite a few very large, well-known instances on their defederation list.

For example, a percentage of the individuals IN THIS THREAD are on instances defederated from beehaw.

I didn’t say they blocked few people. I said they blocked few websites.

Lemmygrad is full of agitators, and Lemmy.world and SJW have, from my experiences, a disproportionate number of people who reject communal solutions to communal issues, while still feeling entitled to access to communal spaces.

Meanwhile, other large sites, like Lemmy.ml and kbin.social, and smaller regional sites, such as Midwest.social, Lemmy.ca, and feddit.uk, are federating with them just fine.

That doesn’t sound like mass defederating to me.

That sounds targeted.

Some older federated services, like IRC, had to drop open federation early in their history to prevent abusive instances from cropping up constantly, and instead became multiple different federations with different policies.

That’s one way this service might develop. Not necessarily, but it’s gotta be on the table.

I read somewhere that Mastodon prevents this by requiring a real domain to federate with. This would make it costly for bots to spin up their own instances in bulk. This solution could be expanded to require domains of a certain "status" to allow federation. For example, newly created domains might be blacklisted by default.
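A minimal sketch of the "block newly created domains by default" idea. It assumes the domain's registration date has already been obtained from some external source (for example a WHOIS or RDAP lookup, not shown); the 90-day threshold, the allowlist override, and `allow_federation` are hypothetical choices, not how Mastodon or Lemmy actually decide federation.

```python
from datetime import date, timedelta


def allow_federation(
    domain: str,
    registered_on: date,
    today: date,
    min_age: timedelta = timedelta(days=90),
    allowlist: frozenset[str] = frozenset(),
) -> bool:
    """Refuse federation with domains younger than `min_age`,
    unless an admin has explicitly allowlisted them."""
    if domain.lower() in allowlist:
        return True
    return today - registered_on >= min_age


print(allow_federation("fresh-bots.example", date(2023, 7, 1), today=date(2023, 7, 10)))  # False
print(allow_federation("established.example", date(2019, 3, 1), today=date(2023, 7, 10)))  # True
```

An age gate only raises the cost of entry; anyone willing to buy domains in advance and let them sit can still pass it.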

I remember, back in the days of playing World of Warcraft, the botters/gold sellers would be banned pretty often.

However, they would be back the next day botting again, despite having to buy another $50 account.

The problem was that the profits they were able to make far outweighed the $50 price of entry.
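The botters' reasoning reduces to a break-even check: buying a replacement account pays off as long as the profit earned before the next ban exceeds the price of entry. Only the $50 account price comes from the comment; the daily profit and days-until-ban values below are made-up inputs for illustration.

```python
def rebuy_is_profitable(account_cost: float, profit_per_day: float, days_until_ban: float) -> bool:
    """True if the expected profit over an account's lifetime exceeds what the account costs."""
    return profit_per_day * days_until_ban > account_cost


# Hypothetical: $40/day in gold sales, banned after 3 days, $50 account.
print(rebuy_is_profitable(50, 40, 3))  # True: $120 earned vs. $50 spent
```

Raising the entry cost or catching bots faster only helps once it flips this inequality.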

Likewise, playing Minecraft with trolls/griefers/etc., the same thing would occur. You could ban somebody, and they would just show up with a new account an hour later. In this case, there wasn't even the option of financial gain, just a dedicated troll.

Do note, also, that domains are very cheap. Some of the more obscure TLDs cost less than $5. lemmyonline.com cost me $12 a week ago.

I think that might help, but I don't think it would be the end-all, be-all solution, especially since many scammers/bot owners already have dozens, if not HUNDREDS, of domains set aside for nefarious purposes.

If "botters" are willing to spend >$5 per bot on established instances, then I don't believe this is a solvable problem. For the fediverse, or for ANY platform, Reddit included. I am perfectly human, and would be hard-pressed to decline a >$150/hour "job" to create accounts on someone's behalf.

Like any other online community, constant, vigilant moderation is the only way to resolve this. I don't see how Lemmy is in any worse position than Reddit, so I don't think we need to be all "doom and gloom" quite yet.

As for botters creating their own instances…

This is just a start. Federation allows for many techniques to solve this. Perhaps even a “Fediverse Universal Whitelist” with an application process. I’m excited for the possibilities, but again I don’t think it’s quite time to be overly concerned yet. These are solvable problems.
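One way a "Fediverse Universal Whitelist" with an application process could work, sketched under heavy assumptions: an instance applies, admins of already-trusted instances review it, and it is accepted once enough distinct trusted instances approve. The `FederationWhitelist` class, the approval threshold, and the whole process are hypothetical illustrations, not an existing Lemmy feature.

```python
from dataclasses import dataclass, field


@dataclass
class FederationWhitelist:
    """Hypothetical shared whitelist: applicants need approvals from already-trusted instances."""
    required_approvals: int = 3
    trusted: set[str] = field(default_factory=set)
    pending: dict[str, set[str]] = field(default_factory=dict)

    def apply(self, instance: str) -> None:
        # Open an application; it starts with no approvals.
        if instance not in self.trusted:
            self.pending.setdefault(instance, set())

    def approve(self, applicant: str, approver: str) -> None:
        # Only trusted instances may approve, and each one counts once (approvals are a set).
        if approver not in self.trusted or applicant not in self.pending:
            return
        self.pending[applicant].add(approver)
        if len(self.pending[applicant]) >= self.required_approvals:
            self.trusted.add(applicant)
            del self.pending[applicant]

    def may_federate(self, instance: str) -> bool:
        return instance in self.trusted


wl = FederationWhitelist(required_approvals=2, trusted={"a.example", "b.example", "c.example"})
wl.apply("new.example")
wl.approve("new.example", "a.example")
print(wl.may_federate("new.example"))  # False: only one approval so far
wl.approve("new.example", "b.example")
print(wl.may_federate("new.example"))  # True
```

Requiring several independent approvals means a single compromised or careless admin cannot vouch a bot instance in alone.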

The downside we have on Lemmy, compared to Reddit:

On Reddit, all accounts go through a single sign-up method. That gives them the advantage of being able to block based on IP, email TLD, and other methods.

While none of those methods are absolute, and all can be easily circumvented, they do have a central location for studying the data to determine how to better prevent the issues in the future.

Here in Lemmy-land, that isn't the case. As an instance admin, I can block you. I can block your email. I can block your IP. I can block your entire country's IP ranges and ASNs. But there is nothing stopping you from turning around and doing the same thing on any of the other instances, as they have no idea of the actions I just performed.
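A sketch of how admins could share ban actions without publishing raw personal data: each instance contributes SHA-256 fingerprints of normalized email addresses it has banned, and other instances check new sign-ups against the pooled set. The `SharedBanList` class and the normalization rule are assumptions for illustration, not a description of db0's project. Plain unsalted hashes of emails can be reversed by hashing lists of known addresses, so a real system would need stronger privacy protections.

```python
import hashlib


def normalize_email(email: str) -> str:
    # Hypothetical normalization: trim whitespace and lowercase the whole address.
    return email.strip().lower()


def email_fingerprint(email: str) -> str:
    """SHA-256 hex digest of the normalized address, shared instead of the raw email."""
    return hashlib.sha256(normalize_email(email).encode("utf-8")).hexdigest()


class SharedBanList:
    """Pooled fingerprints contributed by participating instances."""

    def __init__(self) -> None:
        self._reports: dict[str, set[str]] = {}

    def report(self, reporting_instance: str, email: str) -> None:
        # Record that this instance banned the address behind the fingerprint.
        self._reports.setdefault(email_fingerprint(email), set()).add(reporting_instance)

    def reporters(self, email: str) -> set[str]:
        # Instances that have banned this address (empty set if none).
        return set(self._reports.get(email_fingerprint(email), set()))


shared = SharedBanList()
shared.report("lemmyonline.com", "Spammer@example.com")
# Another instance checks a new sign-up against the pooled list.
print(shared.reporters(" spammer@example.com "))  # {'lemmyonline.com'}
```

The list only reports who banned an address; each receiving admin still decides what to do with that signal.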

db0 has been working on a project that looks like it might fill this gap. LINK TO HIS COMMENT

I think this would be a good start.